Handling large files in Python can be a daunting task, especially when dealing with memory limitations and performance issues. However, Python provides a range of tools and techniques to manage and manipulate large files efficiently. In this article, we'll explore different strategies and best practices for handling large files in Python, so that your code runs smoothly and efficiently.

1. Understanding Large Files

A large file is any file that is too big to be comfortably processed in memory. This might include text files, CSVs, logs, images, or binary data. When working with large files, it's essential to understand the effects of file size on performance, memory usage, and data handling.

Why Is It Difficult?

Memory Limitations: Loading a large file entirely into memory can lead to crashes or slow performance due to limited RAM.

Performance Concerns: Reading and writing large files can be time-consuming. Optimizing these operations is crucial.

Data Integrity: Ensuring the integrity of data while reading from or writing to files is critical, particularly in applications that require reliability.

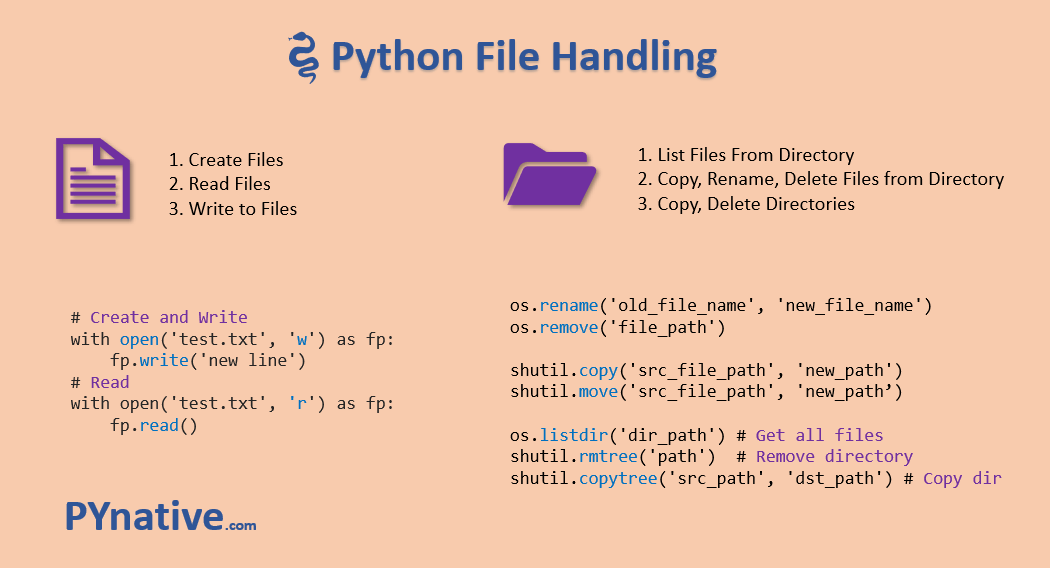

2. Basic File Operations in Python

Before diving into handling large files, let's revisit basic file operations in Python:

    # Opening a file
    with open('example.txt', 'r') as file:
        content = file.read()  # Read the entire content

    # Writing to a file
    with open('output.txt', 'w') as file:
        file.write("Hello, World!")  # Write data to the file

Using the with statement is recommended because it ensures that files are properly closed after the block finishes, even if an exception is raised.
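
To make the cleanup guarantee concrete, here is a minimal sketch. The scratch file name and the deliberately raised ValueError are assumptions made for this illustration only.

```python
import os
import tempfile

# Hypothetical scratch file, created only for this demonstration.
path = os.path.join(tempfile.mkdtemp(), "demo.txt")

try:
    with open(path, "w") as file:
        file.write("partial data")
        # Simulate a failure in the middle of the block.
        raise ValueError("simulated failure inside the with block")
except ValueError:
    pass

# The context manager closed the file even though an exception was raised.
print(file.closed)
```

Because the file is closed on the way out of the block, the data written before the failure is also flushed to disk.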

3. Efficient Techniques for Handling Large Files

3.1. Reading Files in Chunks

One of the most effective ways to deal with large files is to read them in smaller chunks. This approach minimizes memory usage and allows you to process data sequentially.

Example: Reading a File Line by Line

Instead of loading the whole file into memory, read it line by line:

    with open('large_file.txt', 'r') as file:
        for line in file:
            process(line)  # Replace with your processing function
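
As one possible concrete use of line-by-line reading, the sketch below counts lines that contain a keyword. The helper name count_matching_lines and the sample log contents are assumptions for illustration, not part of any library.

```python
import os
import tempfile


def count_matching_lines(path, keyword):
    """Count lines containing keyword, holding only one line in memory at a time."""
    count = 0
    with open(path, "r", encoding="utf-8") as file:
        for line in file:
            if keyword in line:
                count += 1
    return count


# Build a small sample log so the sketch runs on its own.
sample_path = os.path.join(tempfile.mkdtemp(), "sample.log")
with open(sample_path, "w", encoding="utf-8") as file:
    file.write("INFO start\nERROR disk full\nINFO retry\nERROR timeout\n")

print(count_matching_lines(sample_path, "ERROR"))
```

The same loop works unchanged on a multi-gigabyte file, since memory use depends on the longest line rather than on the file size.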

Example: Reading Fixed-Size Chunks

You can also read a specific number of bytes at a time, which can be more efficient for binary files:

    chunk_size = 1024  # 1 KB

    with open('large_file.bin', 'rb') as file:
        while True:
            chunk = file.read(chunk_size)
            if not chunk:
                break
            process(chunk)  # Replace with your processing function
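
A common application of fixed-size chunks is computing a checksum without loading the file. The sketch below assumes a hypothetical helper named sha256_of_file and a 64 KB chunk size chosen arbitrarily; it uses only the standard hashlib module.

```python
import hashlib
import os
import tempfile


def sha256_of_file(path, chunk_size=64 * 1024):
    """Return the SHA-256 hex digest of a file, reading it chunk by chunk."""
    digest = hashlib.sha256()
    with open(path, "rb") as file:
        # iter() with a sentinel keeps calling file.read() until it returns b"".
        for chunk in iter(lambda: file.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


# Sample binary file so the sketch is self-contained.
sample_path = os.path.join(tempfile.mkdtemp(), "sample.bin")
with open(sample_path, "wb") as file:
    file.write(b"abc" * 100_000)

print(sha256_of_file(sample_path))
```

The digest is the same whatever chunk size is used, so the size can be tuned for throughput without affecting the result.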

3.2. Using the fileinput Module

The fileinput module is helpful when you want to iterate over lines from multiple input streams. This is particularly useful when combining files.

    import fileinput

    for line in fileinput.input(files=('file1.txt', 'file2.txt')):
        process(line)  # Replace with your processing function
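
When combining files it is often useful to know where each line came from. The sketch below uses the filename() and filelineno() methods of the object returned by fileinput.input(); the two sample files and their contents are assumptions created just for the demonstration.

```python
import fileinput
import os
import tempfile

# Create two small sample files so the sketch runs on its own.
tmp_dir = tempfile.mkdtemp()
paths = []
for name, text in (("part1.txt", "alpha\nbeta\n"), ("part2.txt", "gamma\n")):
    path = os.path.join(tmp_dir, name)
    with open(path, "w") as file:
        file.write(text)
    paths.append(path)

records = []
with fileinput.input(files=paths) as stream:
    for line in stream:
        # filename() is the file currently being read;
        # filelineno() is the line number within that file.
        records.append(
            (os.path.basename(stream.filename()), stream.filelineno(), line.rstrip("\n"))
        )

for record in records:
    print(record)
```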

3.3. Memory-Mapped Files

For extremely large files, consider using memory-mapped files. The mmap module allows you to map a file directly into memory, letting you access it as if it were an array of bytes.

    import mmap

    with open('large_file.bin', 'r+b') as f:
        mmapped_file = mmap.mmap(f.fileno(), 0)  # Map the entire file
        # Read data from the memory-mapped file
        data = mmapped_file[:100]  # Read the first 100 bytes
        mmapped_file.close()

Memory-mapped files are especially useful for random access patterns in large files.
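
As a small illustration of random access, the sketch below searches a mapped file for a byte pattern and slices out just that region. The sample file layout and the MARKER pattern are assumptions for the example; the mapping is opened read-only with mmap.ACCESS_READ.

```python
import mmap
import os
import tempfile

# Sample file: padding, a marker, then more padding.
sample_path = os.path.join(tempfile.mkdtemp(), "sample.bin")
with open(sample_path, "wb") as file:
    file.write(b"\x00" * 4096 + b"MARKER" + b"\x00" * 4096)

with open(sample_path, "rb") as file:
    # Length 0 maps the whole file; ACCESS_READ keeps the mapping read-only.
    with mmap.mmap(file.fileno(), 0, access=mmap.ACCESS_READ) as mapped:
        offset = mapped.find(b"MARKER")         # Search without reading the file into a bytes object
        found = mapped[offset:offset + 6]       # Slice only the bytes of interest

print(offset, found)
```

Using the mapping as a context manager closes it automatically, which avoids the explicit close() call.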

3.4. Using pandas for Large Data Files

For structured data like CSV or Excel files, the pandas library offers efficient methods for handling large datasets. The read_csv function supports chunking as well.

Example: Reading Large CSV Files in Chunks

    import pandas as pd

    chunk_size = 10000  # Number of rows per chunk

    for chunk in pd.read_csv('large_file.csv', chunksize=chunk_size):
        process(chunk)  # Replace with your processing function

Using pandas also provides a wealth of functionality for data manipulation and analysis.
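
Chunked reading pairs naturally with aggregations that can be combined across chunks. The following sketch sums a column per group one chunk at a time; the column names region and amount, the sample data, and the tiny chunk size are all assumptions chosen to keep the example short.

```python
import os
import tempfile

import pandas as pd

# Small sample CSV so the sketch is self-contained.
sample_path = os.path.join(tempfile.mkdtemp(), "sales.csv")
pd.DataFrame(
    {"region": ["north", "south"] * 5, "amount": range(10)}
).to_csv(sample_path, index=False)

totals = {}
for chunk in pd.read_csv(sample_path, chunksize=4):
    # Aggregate within the chunk, then fold the partial result into the running totals.
    partial = chunk.groupby("region")["amount"].sum()
    for region, amount in partial.items():
        totals[region] = totals.get(region, 0) + int(amount)

print(totals)
```

Only one chunk of rows is held in memory at a time, so the running totals stay small even when the CSV does not fit in RAM.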

3.5. Generators for Large Files

Generators are a powerful way to handle large files because they yield one item at a time and can be iterated over without loading the whole file into memory.

Example: Creating a Generator Function

    def read_large_file(file_path):
        with open(file_path, 'r') as file:
            for line in file:
                yield line.strip()  # Yield each line

    for line in read_large_file('large_file.txt'):
        process(line)  # Replace with your processing function
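
Generators can also be chained into a pipeline in which each stage pulls one item at a time from the previous one. The stage names below (read_lines, non_empty, parse_ints) and the sample data are hypothetical, chosen only to sketch the idea.

```python
import os
import tempfile


def read_lines(path):
    """Yield lines from a file without their trailing newline."""
    with open(path, "r", encoding="utf-8") as file:
        for line in file:
            yield line.rstrip("\n")


def non_empty(lines):
    """Skip blank or whitespace-only lines."""
    for line in lines:
        if line.strip():
            yield line


def parse_ints(lines):
    """Convert each remaining line to an integer."""
    for line in lines:
        yield int(line)


# Sample input with blank lines mixed in.
sample_path = os.path.join(tempfile.mkdtemp(), "numbers.txt")
with open(sample_path, "w", encoding="utf-8") as file:
    file.write("10\n\n20\n   \n30\n")

# Nothing is read until sum() starts pulling values through the pipeline.
total = sum(parse_ints(non_empty(read_lines(sample_path))))
print(total)
```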

4. Writing Large Files Efficiently

4.1. Writing in Chunks

As with reading, when writing large files, consider writing data in chunks to minimize memory usage:

    with open('output_file.txt', 'w') as file:
        for chunk in data_chunks:  # Assume data_chunks is a list of data
            file.write(chunk)
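
If the data can be produced lazily, the chunks never need to exist as a list at all. The sketch below assumes a hypothetical generator named generate_rows and passes it to writelines(), which accepts any iterable of strings.

```python
import os
import tempfile


def generate_rows(count):
    """Produce output lines one at a time instead of building a list."""
    for i in range(count):
        yield f"row {i}\n"


output_path = os.path.join(tempfile.mkdtemp(), "output_file.txt")
with open(output_path, "w", encoding="utf-8") as file:
    # writelines() consumes the generator lazily; it does not add newlines itself.
    file.writelines(generate_rows(1000))

print(os.path.getsize(output_path))
```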

4.2. Using the csv Module for CSV Files

The csv module provides a simple way to write large CSV files efficiently:

    import csv

    with open('output_file.csv', 'w', newline='') as csvfile:
        writer = csv.writer(csvfile)
        for row in data:  # Assume data is a list of rows
            writer.writerow(row)
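
The rows do not have to come from a list. As a sketch, the example below feeds a generator to writerows(), so rows are written as they are produced; the generate_records helper and its column layout are assumptions for illustration.

```python
import csv
import os
import tempfile


def generate_records(count):
    """Yield one row at a time: (id, name, square of id)."""
    for i in range(count):
        yield (i, f"user{i}", i * i)


output_path = os.path.join(tempfile.mkdtemp(), "output_file.csv")
with open(output_path, "w", newline="", encoding="utf-8") as csvfile:
    writer = csv.writer(csvfile)
    writer.writerow(["id", "name", "square"])  # Header row
    writer.writerows(generate_records(5))      # Accepts any iterable of rows

# Read the file back to show what was written.
with open(output_path, newline="", encoding="utf-8") as csvfile:
    rows = list(csv.reader(csvfile))
print(rows)
```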

4.3. Appending to Files

If you need to add data to an existing file, open it in append mode:

    with open('output_file.txt', 'a') as file:
        file.write(new_data)  # Replace with your new data
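
To show that append mode preserves earlier content, here is a minimal sketch. The append_line helper and the log file name are hypothetical; note that mode 'a' also creates the file if it does not exist yet.

```python
import os
import tempfile


def append_line(path, message):
    """Add one line to the end of a file, creating the file if needed."""
    with open(path, "a", encoding="utf-8") as file:
        file.write(message + "\n")


log_path = os.path.join(tempfile.mkdtemp(), "app.log")
append_line(log_path, "first run")
append_line(log_path, "second run")  # Earlier content is kept, not overwritten

with open(log_path, encoding="utf-8") as file:
    entries = file.read().splitlines()
print(entries)
```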

5. Summary

Handling large files in Python requires careful consideration of memory usage and performance. By using techniques such as reading files in chunks, using memory-mapped files, and leveraging libraries like pandas, you can efficiently manage large datasets without overwhelming your system's resources. Whether you're processing text files, CSVs, or binary data, the techniques outlined in this article will help you handle large files effectively and keep your programs performant and responsive.

6. Further Reading

Python Documentation on File Handling

Pandas Documentation

Python mmap Module

Fileinput Documentation

By incorporating these techniques into your workflow, you can make the most of Python's capabilities and efficiently handle even the largest of files. Happy coding!